Consequences of the wrong status code for robots.txt

If you have a website, you may have heard of something called “robots.txt”. If you are interested in SEO, you definitely have. But I doubt you realize how important it is. If you already know your way around robots.txt, then skip down to read about the consequences of the wrong status code – I guarantee, that this will be an eye opener… and for easily adding this info to your daily work routine I have added an infographic of the status codes’ impact on Googlebot (go to the bottom of this post).

What robots.txt is used for

Robots.txt is a small file that, if you choose to have it, MUST be accessible at www.yourwebsite.xxx/robots.txt.

The purpose of the file is to specify which pages Google is allowed to crawl and probably more important – which pages Google is not allowed to crawl.

However, compliance with robots.txt is entirely voluntary on the part of the crawler, so it requires that those who have programmed the crawler have built it to comply with robots.txt commands… Fortunately, the major search engines do comply with robots.txt.

For example, you can, if you type in the following in robots.txt, prevent search engines from crawling your entire site:

User-agent: *

Disallow: /

A “user agent” is a piece of text that identifies a visitor (in this case a crawler) – think of it as a name. When the crawler requests to see content from a (web address on a) server, it tells the server its name (what its user-agent is).

The asterisk * means “matches everything”. The command User-agent: * means that it matches all “user-agents” – which is every crawler that comes by.

Commands in “Disallow” are relative URLs that start in the root. This means that “/” covers anything beyond the root – ie. all content. Read more about robots.txt here.

The above example thereby blocks all crawling since it prohibits crawling of all content by all user agents.

Robots.txt is therefore a file where, if you mistype, you can inadvertently really hurt your rankings in Google… Conversely, you can also optimize visibility in Google if you have a lot of pages that do nothing besides wasting Google’s time crawling them – e.g. filter and sorting pages on a large eCom site.

How Google uses robots.txt

Every time Googlebot needs to follow a link to your site (crawl it), it starts by checking www.yourwebsite.xxx/robots.txt to make sure it really is allowed to crawl the link it is about to crawl.

One of the reasons for Googlebot checking robots.txt is that it will not run the risk of crawling and indexing sensitive content that may cause problems for Google or the website owner.

However, website owners might not have knowledge of robots.txt, so they have not added one – in which case the file will not exist on the website. When this happens, Google will ask the web server to view the content at www.yourwebsite.xxx/robots.txt, where the server typically responds with a status code 404 – that the content does not exist. This will be interpreted by Google as a permission for Googlebot to crawl the content – on the assumption that people who do not care about robots.txt probably still want their content to be found in Google.

On the other hand, if your robots.txt exists, your server will respond to Google’s request with a status code 200 and Googlebot will crawl based on the rules in the robots.txt file.

BUT, there are also situations where the server has an error and therefore does not know whether the robots.txt file exists or not. When this happens, the server will respond with a status code 5xx (for example a code 500).

Remember that Google checks robots.txt to make sure it’s not accessing sensitive content!

If Googlebot encounters a status code in the 500 area (for robots.txt), it will defer to crawl the site and wait for robots.txt to respond again with a status code 200 or a code in the 400 area…

Let’s back up: With a status code 500, Google stops crawling the site!

Consequences of the wrong status code

If your robots.txt returns a server error in the 500 area, Google stops crawling the site and besides obviously affecting your SEO, it has several other consequences. Below are some of the things which happen:

- Googlebot stops crawling the site and therefore changes to the content are not indexed.

- “Test Live URL” in Google Search Console does not work.

- Your ads in Google Ads are disapproved for lack of crawlability, mobile readiness and/or the quality of the pages.

- AdSense changes are rejected.

The reason is that “Googlebot” is the infrastructure in the Google service network, so all crawling-dependent services are affected.

I recently had a case where the above just happened to a large customer. Over 300 ads in Google Ads were rejected. The rejection was explained by Google support as partly because the pages were slow and partly as by being blocked by robots.txt for mobile crawling.

I knew robots.txt did not exclude mobile crawlers, so I rushed to check robots.txt – which gave me an error code 500.

I knew it was critical, so we got it fixed ASAP and we ran the ads past Google support again – which could now approve them with the answer:

Thank you for your answer and follow-up question.

The pages were approved when you had the robots.txt issue fixed.

I hope this has solved your challenge. If you have any further questions, please contact us again.

If you are to believe Google’s robots.txt specification, the problem will be limited to 30 days – but few online businesses want to do without Google’s services for 30 days.

Therefore, besides knowing how robots.txt can be used, it is super important that you are also aware of the dire consequences, if your robots.txt does not respond with the correct status code.

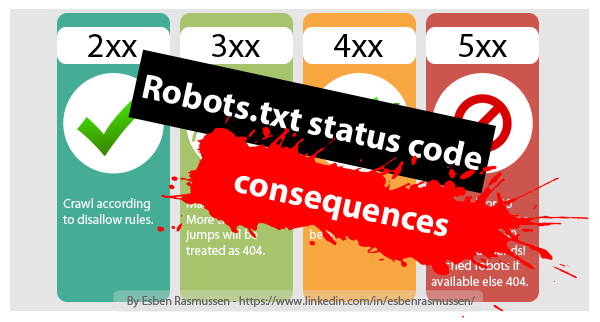

I’ve created the infographic below to quickly keep track of how different robots.txt status codes affect Googlebot. Feel free to download it.

Do you know anyone who could benefit from this knowledge – whether they are other nerds like me, IT guys (who should be keeping your robots.txt alive) or just people running a website – then please ask them, how Google will behave, if robots.txt responds with a status code 500.

BloggingXP says:

Because of Some Issues One of my Website vps down for almost 4-5 days. That site lost almost all ranking and traffic from 20K Per day to 500. Its almost 25+ days but still no ranking recovery. How google respond to such errors.

Esben Rasmussen says:

Wow, sorry to hear about that.

It sounds weird that Google should punish your site for “just” being down for a couple of days for a prolonged period afterwards. Have you tried to look into GSC to see the if the amount of error pages has returned to normal? If they haven’t, I would concentrate my energy on getting Google to understand, that your entire site is back up and does not have those errors any longer.